Changelog News

Developer news worth your attention

Jerod here! 👋

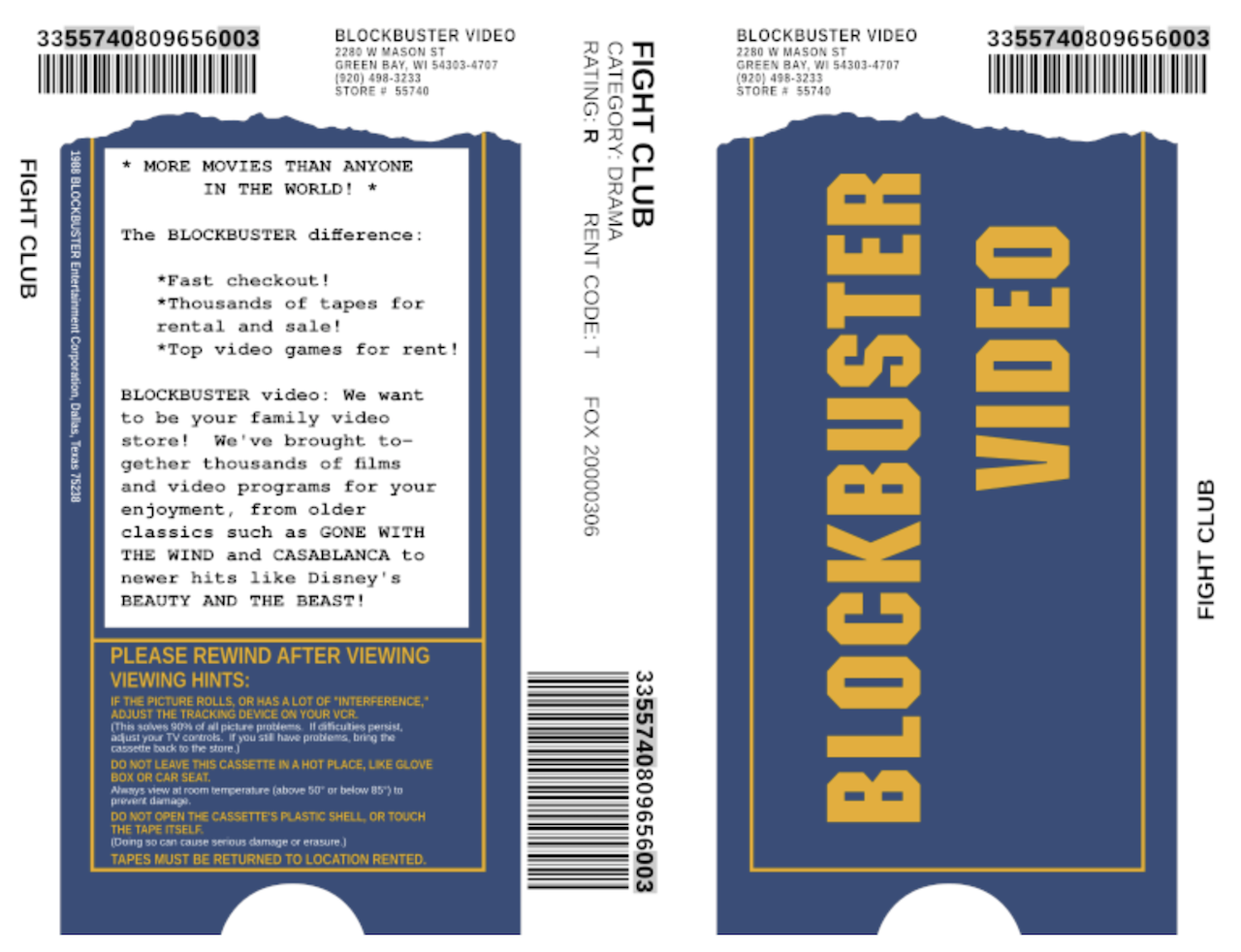

If our Winamp/Napster talk with Jordan Eldredge pushed your nostalgia buttons at all, then Ryan Finnie’s recreation of Blockbuster Video’s VHS insert (editable via SVG) should hit hard, too.

Be kind, rewind! Ok, let’s get into this week’s news.

🎧 Simply the best pods for devs

🎙️ Why we need Ladybird (Andreas Kling & Chris Wanstrath)

💚 The Winamp era (Jordan Eldredge)

🚀 Cloud-centric security logging (Steven Wu)

🪩 Forging Minecraft’s scripting API (Raph Landaverde & Jake Shirley)

🤖 Only as good as the data (Daniel & Chris)

⏰ Big shoes to fill (Kris, Angelica & Johnny)

🗯️ Quote of the week

“Searching for facts on the internet is a bit like foraging for mushrooms. Doesn’t matter how experienced you are… you should double check every single one.” – Evan Travers

✊ Practices of reliable software design

Chris Stjernlöf got nerd-sniped. Out of the blue, a friend asked him how he would build an in-memory cache, laying out a few design constraints. He couldn’t not take the bait.

In the process of answering the question and writing the code, I discovered a lot of things happened in my thought processes that have come with experience. These are things that make software engineering easier, but that I know I wouldn’t have considered when I was less experienced.

He jotted down eight practices that he’s adopted with experience and used while writing a fast, small, in-memory cache. They are:

- Use Off-The-Shelf

- Cost And Reliability Over Features

- Idea To Production Quickly

- Simple Data Structures

- Reserve Resources Early

- Set Maximums

- Make Testing Easy

- Embed Performance Counters

A few of these I live by. A few of these I hadn’t really considered. I imagine every experienced dev has a similar list floating around in their head. That’d be an aggregation project worthy of the effort…
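To make a few of these concrete, here is a minimal sketch of how "Simple Data Structures", "Set Maximums" and "Embed Performance Counters" might look in a tiny in-memory cache. This is not Chris's code: the `BoundedCache` class, its counter names, and the oldest-first eviction policy are all assumptions made up for illustration.

```typescript
// Hypothetical sketch: BoundedCache, its counters, and the FIFO eviction
// policy are illustrative assumptions, not taken from the original article.
class BoundedCache<V> {
  // Simple data structure: a Map iterates in insertion order,
  // so its first key is always the oldest inserted entry.
  private readonly entries = new Map<string, V>();

  // Embedded performance counters, readable at any time.
  readonly counters = { hits: 0, misses: 0, evictions: 0 };

  // Set maximums: the cache refuses to exist without an upper bound.
  constructor(private readonly maxEntries: number) {
    if (!Number.isInteger(maxEntries) || maxEntries < 1) {
      throw new RangeError("maxEntries must be a positive integer");
    }
  }

  get(key: string): V | undefined {
    if (this.entries.has(key)) {
      this.counters.hits++;
      return this.entries.get(key);
    }
    this.counters.misses++;
    return undefined;
  }

  set(key: string, value: V): void {
    if (!this.entries.has(key) && this.entries.size >= this.maxEntries) {
      // At capacity and the key is new: evict the oldest inserted entry.
      const oldest = this.entries.keys().next().value as string;
      this.entries.delete(oldest);
      this.counters.evictions++;
    }
    this.entries.set(key, value);
  }

  get size(): number {
    return this.entries.size;
  }
}

// Usage: a cache capped at two entries.
const cache = new BoundedCache<number>(2);
cache.set("a", 1);
cache.set("b", 2);
cache.set("c", 3); // over the maximum, so "a" is evicted
const missed = cache.get("a"); // counted as a miss
const found = cache.get("c"); // counted as a hit
```

Because the counters live on the cache itself, a test (or a dashboard) can read `cache.counters` directly instead of inferring behavior from the outside, which also nudges toward "Make Testing Easy".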

⚰️ Micro-libraries need to die already

Ben Visness has had enough of the npm community’s propensity to pull in a micro-library for every need.

Here is my thesis: Micro-libraries should never be used. They should either be copy-pasted into your codebase, or not used at all.

However, my actual goal with this article is to break down the way I think about the costs and benefits of dependencies. I won’t be quantifying these costs and benefits, but I hope that by explaining the way I think about dependencies, it will be clear why I think micro-libraries are all downside and no upside.

“Micro” is a subjective measure, but Ben is talking about single-function libraries. The case study he uses is is-number, which certainly qualifies by any measure. I whole-heartedly agree with him. A saying I learned from years of producing Go Time comes to mind: “A little copying is better than a little dependency.” (src)
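For a sense of scale, here is roughly what "a little copying" could look like for a check of this kind. It is a sketch of a local helper in the spirit of is-number, not a copy of the library's source, and it is not claimed to match the library's behavior in every edge case; the `isNumber` name and the exact rules (finite numbers, plus non-blank strings that parse to a finite number) are assumptions.

```typescript
// Hypothetical local helper, written for illustration. It is not the
// is-number package's source and may differ from it in edge cases.
function isNumber(value: unknown): boolean {
  if (typeof value === "number") {
    // Rejects NaN, Infinity and -Infinity.
    return Number.isFinite(value);
  }
  if (typeof value === "string" && value.trim() !== "") {
    // Non-blank strings count only if they convert to a finite number.
    return Number.isFinite(Number(value));
  }
  // Everything else (null, undefined, objects, booleans, ...) is not a number.
  return false;
}
```

A dozen lines that live in your own repo are easy to read, test, and adjust when your definition of "number" differs from someone else's.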

🎣 Harvesting, fishing, panning for gold

I like this set of metaphors for how to think about bucketing challenges into different categories. Some problems are like harvesting:

Harvesting problems have straightforward solutions and no shortcuts: You just get a big basket and pick every damn strawberry in the field. You solve these problems with pure perseverance, slogging away for weeks, months, or years until they are done.

Some problems are like fishing. You know that there are fish out there in the ocean, but you don’t know exactly where. If a great fisherman knows where the hungriest fish are and how to set their lines just right, they might catch everything that they need in a few hours. Fishing problems can sometimes be solved shockingly fast by motivated teams with a bit of luck.

Some problems are like panning for gold – going out to a river or stream where there might be gold, getting your pan out, and seeing if you can find traces of the shiny stuff in the sediment. If you find gold, you can become generationally successful – think of the massive moats created by Google Search or the AirBnB network.

If you can categorize the problem you’re trying to solve into one of these buckets, applicable strategies become clearer.

💰 Supabase Launch Week 12 recap

Thanks to Supabase for sponsoring Changelog News

Last week was Launch Week 12 for Supabase. Here’s a recap of what they shipped:

Monday: They launched postgres.new - an in-browser Postgres with an AI interface.

Tuesday: Authorization for Realtime’s Broadcast and Presence went public beta. You can now convert a Realtime Channel into an authorized Channel using RLS policies in two steps.

Wednesday: They shared three new announcements for Supabase Auth: support for third-party Auth providers, phone-based Multi-factor Authentication (SMS and WhatsApp) & new Auth Hooks for SMS and email.

Thursday: They released Log Drains so you can export logs generated by Supabase to external destinations, like Datadog or custom HTTP endpoints.

Friday: They released support for Wasm (WebAssembly) Foreign Data Wrappers. Now anyone can create a Foreign Data Wrapper (FDW) that lets Postgres interact with externally hosted data, and share it with the Supabase community.

That’s a lot!

Learn more about all of Supabase’s Launch Week right here.

🧐 Inside the “3 billion people” NPD breach

Troy Hunt:

Usually, it’s easy to articulate a data breach; a service people provide their information to had someone snag it through an act of unauthorised access and publish a discrete corpus of information that can be attributed back to that source. But in the case of National Public Data, we’re talking about a data aggregator most people had never heard of where a “threat actor” has published various partial sets of data with no clear way to attribute it back to the source…

I’ve been collating information related to this incident over the last couple of months, so let me talk about what’s known about the incident, what data is circulating and what remains a bit of a mystery.

When Troy says he’s been collecting info for a “couple of months”, he’s not kidding. This one goes deep. My summary of his summary: it’s a giant mess. One important takeaway:

there were no email addresses in the social security number files. If you find yourself in this data breach via HIBP, there’s no evidence your SSN was leaked, and if you’re in the same boat as me, the data next to your record may not even be correct… So, treat this as informational only, an intriguing story that doesn’t require any further action.

🛠️ Dasel: one data tool to rule them all

I like this pitch:

Say good bye to learning new tools just to work with a different data format.

Dasel uses a standard selector syntax no matter the data format. This means that once you learn how to use dasel you immediately have the ability to query/modify any of the supported data types without any additional tools or effort.

It’s a lot like jq, but supports JSON, YAML, TOML, XML and CSV (with zero runtime dependencies). The only thing I can imagine that’d be better would be to use SQL instead. One less syntax for most of us to learn…

🎞️ Clip of the week: tmux is cool!

I think Nick Janetakis and I did a pretty good job selling tmux to Adam. WDYT?

🔗 Visual data structures cheat sheets

Nick M did an excellent job visualizing (and aggregating other people’s visualizations of) key data structures that are used in real-world applications. Merkle Trees, Bloom Filters & Skip Lists (oh my)

🔨 Go is my hammer, and everything is a nail

I’m a big proponent of using “the right tool for the job”, but I freely admit that there’s also value in finding a generally good tool that’s good enough for most jobs and just using that (most of the time). Markus is living that life with Go.

I now build basically all my software in Go, from tiny one-off tools, to web services, CLIs, and everything in between.

But why? When the common wisdom is to always take the problem at hand, analyze it, and then choose the tools, why would I ignore that and go: “nah, I’ll just use Go again”?

Well, I present to you: REASONS.

🚫 Taking a stand against names

Steve Klabnik asks a very good question:

if naming things is so hard… why do we insist on doing it all the time?

There are good answers to this question, but Steve isn’t actually saying we shouldn’t name things. What he’s proposing is that there are times when you think you need to name something… and maybe you don’t. Two examples he’s had success not naming recently: code branches & CSS classes.

👃 Data is just an added sense

This pairs well with my recent confession that I don’t believe too heavily in data-driven decision making. I’m not against it, I just don’t find that much value in it. In this post, Cedric Chin proposes data as an additional sense you can use to make decisions, which I think gives the category about the right amount of credence:

business operators have senses that help them navigate the running of their businesses. Most business operators use only two senses: 1) Intuition, 2) Qualitative sense-making

Data is just an added sense. It is not better or worse than the other two senses, in the same way that hearing is not necessarily superior to sight, or touch not superior to taste. It all depends on context.

That’s the news for now, but did you know Changelog++ members can now build their own custom feeds?! Feedback on this brand-new feature has been overwhelmingly positive, and people have created 124 feeds already. So that’s cool…

Have a great week, forward this to a friend who might dig it & I’ll talk to you again real soon. 💚

–Jerod